AI voice scamming can turn an ordinary call into something that feels urgent and personal. The same trick can also arrive as a fake Discord message designed to push people into a quick decision.

Fox 4 Now reports that Americans lost more than $12.5 billion to this scheme, following a 241% rise in scams after the pandemic. In this post, we’ll walk you through what AI voice scamming is, how to catch early warning signs, the steps you can take to protect yourself, and a real case that shows just how big the problem has become.

Need support after a scam? Join our community today.

What Is AI Voice Scamming?

AI voice scamming is a form of digital fraud in which scammers use artificial intelligence to clone human voices and trick people. In simple terms, it relies on voice cloning, audio deepfakes, and voice synthesis to create imitations that sound almost identical to genuine speech.

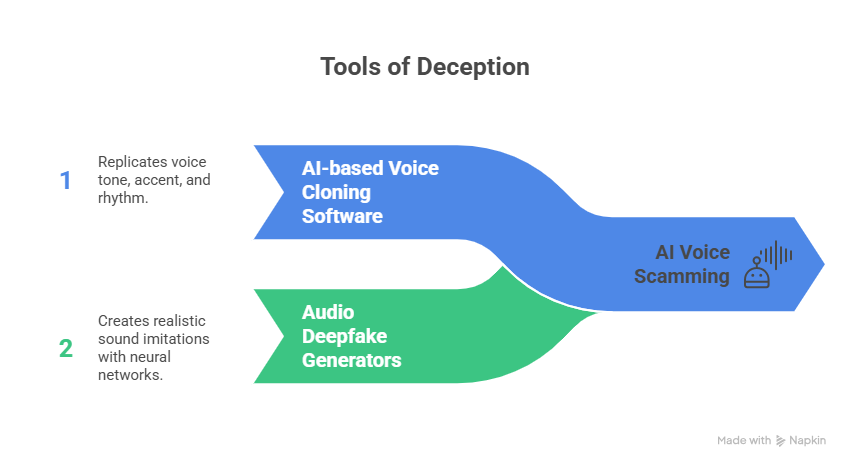

The problem has grown so quickly that it’s now a real concern in cybersecurity. According to Axios, with just three to five seconds of recorded audio, criminals can create a convincing copy of someone’s voice. To pull this off, scammers often use tools such as:

- AI-based voice cloning software: Platforms designed to replicate tone, accent, and rhythm.

- Audio deepfake generators: Applications that use neural networks to produce highly realistic sound imitations.

- Voice synthesis APIs: Services that let users generate customized voices from text combined with short audio clips.

How to Spot an AI Voice Scam

Catching an AI voice scamming attempt isn’t always simple, but there are some clues you can look out for. The good news is that learning to recognize the signs makes it easier to act fast and stay safe.

Here are four of the most common warning signs:

1. Voices That Sound Too Perfect

Human speech is never flawless. In everyday conversations, you’ll hear pauses, breaths, shifts in tone, and filler words, and those little details make a voice feel natural. A cloned voice can sound suspiciously clean because it lacks them.

- Example: Imagine receiving a call from someone claiming to be your brother. The voice sounds just like his, but there are no pauses, laughs, or background noise you’d normally expect. That level of perfection should raise suspicion.

2. Requests With Emotional Urgency

One common tactic in AI voice scamming is to play on emotions, which is why scammers pressure people when they’re most vulnerable. They often create fake emergencies to spark fear or anxiety and push for immediate action.

- Example: You receive a call that sounds like your daughter, saying she has a legal problem and urgently needs money. The desperate tone and the insistence on keeping it secret are clear signs of a scam attempt.

3. Demands For Money Or Sensitive Data

Direct requests for money or personal information are another warning sign of AI voice scamming. If a caller asks you to transfer funds, share passwords, or give out verification codes without offering any way to confirm the request, treat it as a major red flag.

- Example: You get a call in what sounds like your boss’s voice, asking for the company’s access codes right away because “a system is failing”. That kind of demand without verification points to attempted fraud.

4. Calls Imitating Known Numbers (Spoofing)

Spoofing is a frequent trick in AI voice scamming that takes advantage of the trust people place in caller ID. It allows scammers to hide their real number and display one that looks legitimate, like a bank, a company, or even a family member.

- Example: You receive a call that appears to come from your bank. The voice claims your account is at risk and asks for your password to “protect it.” Even if the number looks correct, the request is a scam.

How Scammers Obtain Voice Recordings

For AI voice scamming to work, scammers need one thing above all: your voice. The worrying part is that collecting it doesn’t take much effort. In daily life, people leave short audio snippets online without realizing they could be exploited.

The most common ways scammers capture these samples include:

- Social media: Voice notes in messaging apps, posts on Facebook, or Instagram stories.

- Public videos: Podcasts, live streams, or interviews that stay available online.

- Voice messages: Clips shared on WhatsApp or Telegram that can later be saved and reused.

- Old calls: Stored files on devices, archived voicemails, or leaked audio.

What makes this worse is that scammers don’t need long recordings; a few seconds are often enough to train a voice model. That means anyone who shares audio without thinking becomes an easy target for voice cloning and audio deepfakes.

How to Tell if a Voice Is AI-Generated

AI voice scamming works because many people still find it difficult to tell the difference between a real voice and one created with technology. Even though synthetic voices sound more convincing every day, there are easy ways to analyze them and spot warning signs.

1. Use Digital Tools

The rise of AI voice scamming has led to apps and programs that check voice clips in detail. These programs don’t replace human judgment, but they highlight patterns our ears usually miss, like unnatural sound structures or hidden marks from AI models.

How to put this into practice:

- Deepfake detection apps: Upload a file and let the tool check for synthetic voice patterns.

- File metadata review: Some platforms reveal if a clip carries digital watermarks from cloning software.

- Spectral analysis software: These programs create visual graphs that make it easier to compare real and artificial voices.

- Combine methods: Using more than one tool gives you stronger evidence to confirm suspicions.
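
To make the spectral analysis idea above more concrete, here is a minimal sketch of what such software computes before it draws a graph: the clip is cut into short frames, and each frame is turned into a list of frequency strengths. It uses only the Python standard library and a synthetic 440 Hz tone as a stand-in for a voice clip. The function names, sample rate, and frame size are illustrative assumptions, and the sketch does not detect deepfakes by itself; it only shows the kind of data a detection tool or a human analyst would compare.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive discrete Fourier transform: one magnitude per frequency bin up to Nyquist."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        total = sum(
            sample * cmath.exp(-2j * math.pi * k * i / n)
            for i, sample in enumerate(frame)
        )
        spectrum.append(abs(total))
    return spectrum


def spectrogram(samples, frame_size):
    """Split the signal into non-overlapping frames and transform each one."""
    starts = range(0, len(samples) - frame_size + 1, frame_size)
    return [magnitude_spectrum(samples[s:s + frame_size]) for s in starts]


def peak_frequency(spectrum, sample_rate, frame_size):
    """Return the frequency in Hz of the strongest bin in one frame."""
    peak_bin = max(range(len(spectrum)), key=lambda k: spectrum[k])
    return peak_bin * sample_rate / frame_size


# Synthetic stand-in for a voice clip: a 440 Hz tone sampled at 8 kHz (assumed values).
SAMPLE_RATE = 8000
FRAME_SIZE = 400
tone = [math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(1600)]

frames = spectrogram(tone, FRAME_SIZE)
print(len(frames), "frames")
print([peak_frequency(f, SAMPLE_RATE, FRAME_SIZE) for f in frames])
```

Real tools use faster transforms and overlapping frames, but the output has the same shape: time on one axis, frequency on the other, and strength as the value that gets plotted.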

2. Try Unscripted Questions

Cloned voices are usually trained with common phrases or public recordings. That helps them sound real, but it limits their ability to improvise. A simple way to expose them is to ask unexpected questions that demand genuine answers.

How you can do this in practice:

- Ask about recent details: Bring up something that happened minutes ago or a personal routine only the real person knows.

- Mention time or place: Questions like “Where were you yesterday at 7 p.m.?” are hard for synthetic voices to answer.

- Check fluency: Long pauses, broken sentences, or generic replies point to AI.

- Switch topics suddenly: A cloned voice trained on limited data usually struggles when the subject changes.

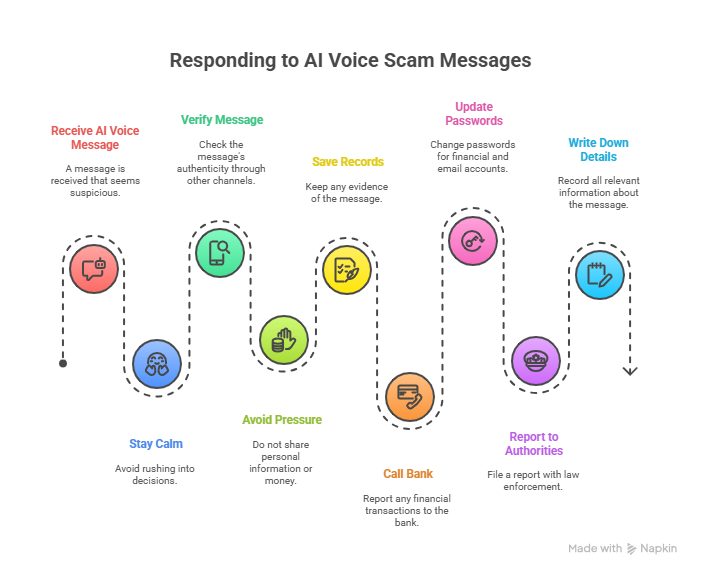

What to Do After Receiving an AI Voice Message

If you ever get a message that sounds like an AI voice scam, the first step is simple: stay calm. Scammers count on people reacting in a rush. Take a moment to pause, think, and double-check before you respond.

Immediate steps you can take

- Verify through another channel: If the caller claims to be a family member or colleague, try reaching them with a separate call or a quick text.

- Don’t give in to pressure: Avoid sending money or sharing personal data until you’re sure about the person’s identity.

- Save records: Keep the audio, screenshots, or any other detail that could later serve as evidence.

If you have already shared money or information

- Call your bank right away: Ask them to block the affected accounts or cards and to monitor for any unusual activity.

- Update your passwords and codes: Start with financial services and email accounts.

- Report the case to authorities: Filing a report can help limit the damage and support investigations.

- Write down every detail: The time, phone number, and what was requested. This gives cybersecurity teams and legal specialists the context they need to act.
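
One way to keep those details consistent is a small structured record. The sketch below is only an illustration: the `ScamIncident` class, its field names, and the sample values are assumptions made for this example, not a format required by any bank or agency.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ScamIncident:
    """One suspicious call or voice message, written down while details are fresh."""
    received_at: str        # when the call or message arrived (ISO 8601 text)
    caller_number: str      # number shown on caller ID (it may be spoofed)
    claimed_identity: str   # who the voice said it was
    request: str            # what was asked for
    evidence_files: list = field(default_factory=list)  # saved audio, screenshots
    actions_taken: list = field(default_factory=list)   # e.g. "called bank"


def to_report(incident):
    """Serialize the record to JSON so it can be attached to a report."""
    return json.dumps(asdict(incident), indent=2)


# Illustrative values only; the number uses the fictional 555-01xx range.
incident = ScamIncident(
    received_at="2025-01-15T19:05:00",
    caller_number="+1-202-555-0143",
    claimed_identity="son",
    request="wire money for a legal emergency",
    evidence_files=["voicemail.wav", "call_log.png"],
)
incident.actions_taken.append("verified with a separate call")
print(to_report(incident))
```

Keeping the record as plain JSON means it can be pasted into an email or attached to a report without any special software.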

Have questions about dealing with scams? Contact us for support.

Real Case: A Man Was Tricked by an AI That Cloned His Son’s Voice

AI voice scamming has shifted from a distant concern to a real threat. Anthony, a Los Angeles resident, lost $25,000 after answering a call that used a cloned version of his son’s voice. According to ABC7, the imitation was so similar in tone and emotion that it was almost impossible to tell apart from the real one.

During the call, scammers pretended there was an emergency: his son was in legal trouble and needed money fast. The cloned voice sounded scared and desperate, which pushed Anthony to react emotionally instead of stopping to think.

Two factors explain why the scam worked:

- Deepfake audio recreated his son’s speech with pauses, tone shifts, and even familiar expressions.

- Emotional pressure framed the situation as a crisis demanding immediate action.

Anthony never confirmed the story with a separate call, text, or trusted channel. That lack of verification gave scammers the opening they needed to complete the fraud.

👉 Check how fake website examples have caused real losses, so you don’t get caught in schemes that risk your money and data.

Tips For Protecting Yourself From AI Voice Scamming

Dealing with AI voice scamming means paying attention to simple habits that minimize the chance of being tricked. The best defense is to add extra steps that make it harder for scammers to clone or misuse your voice:

- Review app permissions: Check which apps ask for microphone access or use voice recognition, and adjust any settings that don’t feel necessary.

- Avoid sharing voice clips: Refrain from posting audio on public platforms. Even a few seconds can be enough to create a convincing clone.

- Use company protocols: In work settings, agree on extra steps such as a safe word or requiring a second call before approving transfers.

- Keep devices updated: Regular updates on phones and computers often fix weaknesses that scammers might try to exploit.
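
The company-protocol tip above (a safe word plus a second call) can be sketched as a simple rule: a transfer is approved only when both checks pass. This is a hedged illustration, not a production system. The function names, the example safe word, and the iteration count are assumptions; the sketch stores a salted hash rather than the safe word itself and compares it in constant time using Python’s standard `hashlib` and `hmac` modules.

```python
import hashlib
import hmac
import os


def hash_safe_word(safe_word, salt):
    """Derive a salted hash so the safe word itself is never stored."""
    normalized = safe_word.strip().lower().encode("utf-8")
    return hashlib.pbkdf2_hmac("sha256", normalized, salt, 100_000)


def may_approve_transfer(spoken_word, stored_hash, salt, callback_confirmed):
    """Approve only if the safe word matches AND a separate callback succeeded."""
    word_matches = hmac.compare_digest(hash_safe_word(spoken_word, salt), stored_hash)
    return word_matches and callback_confirmed


# Setup done once, in person or over a trusted channel (example values).
salt = os.urandom(16)
stored_hash = hash_safe_word("blue heron", salt)

print(may_approve_transfer("Blue Heron", stored_hash, salt, callback_confirmed=True))   # True
print(may_approve_transfer("blue heron", stored_hash, salt, callback_confirmed=False))  # False
print(may_approve_transfer("red falcon", stored_hash, salt, callback_confirmed=True))   # False
```

Requiring both conditions matters because a cloned voice might overhear or guess one of them; the callback to a number you already trust is what a spoofed caller cannot fake.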

Stay Safe: Don’t Fall for AI Voice Scamming

AI voice scamming uses voice cloning to create calls that sound real while hiding an attempt to steal from you. This type of fraud works because it creates urgency and uses familiar voices to build instant trust.

That’s why spotting the warning signs and taking quick steps to verify must become part of everyday security. At Cryptoscam Defense Network, we stand by your side so you can detect fake profiles or even Binance scammers on your own. Our team helps you at every stage to keep your protection strong and your information safe.

✅ Download our Fraud Report Toolkit to easily collect, organize, and report scam cases, with dropdowns for scam types, payment methods, platforms, and direct links to agencies like the FTC, FBI IC3, CFPB, BBB, and more.

We Want to Hear From You!

Fraud recovery is hard, but you don’t have to do it alone. Our community is here to help you share, learn, and protect yourself from future fraud.

Why Join Us?

- Community support: Share your experiences with people who understand.

- Useful resources: Learn from our tools and guides to prevent fraud.

- Safe space: A welcoming place to share your story and receive support.

Find the help you need. Join our Facebook group or contact us directly.

Be a part of the change. Your story matters.